[This blog post discusses our CSCW 2021 paper, which you can read here.]

In 2018, around the time when America’s leading conspiracy theorist[1] was banned from YouTube, Apple, and Facebook,[2] Merriam-Webster noted that a shiny new verb was emerging: ‘to deplatform’ (/diːˈplatfɔːm/), “the attempt to boycott a group or individual through removing the platforms (such as speaking venues or websites) used to share information or ideas.”[3]

The practice has become increasingly prevalent on social media, particularly in the context of right-wing extremism[4] and of conspiracy theories around COVID-19.[5]

Every time a personality or a community is kicked off a platform, two questions resurface:

- Should we deplatform?

- What happens after we deplatform?

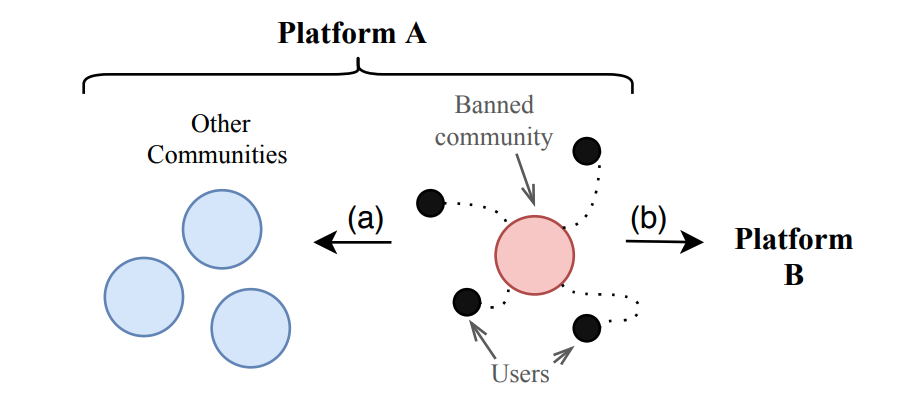

The first question is tightly related to debates on the limits of free speech and on the legitimacy of big tech companies to enforce moderation decisions.[6] Yet from a pragmatic perspective, its answer depends heavily on the second question. After a group is deplatformed from a given social media site, its members can participate in other communities within the same platform, or they can migrate to an alternative, possibly fringe, platform (or platforms) where their behavior is considered acceptable. In both scenarios, things could backfire…

Illustration of how things could backfire…

- Concern #1: If users migrate to other communities within the same platform, their problematic behavior and content may migrate with them to other communities (existing or newly created) on that platform.

- Concern #2: If users migrate to an alternative platform, the ban could unintentionally strengthen a fringe platform (e.g., 4chan or Gab) where problematic content goes largely unmoderated.[9] From the new platform, the toxic community could inflict even greater harm on society.

Previous work has largely addressed concern #1, showing that users who remained on Reddit after several communities were banned in 2015 drastically reduced their use of hate speech, and that counter-actions taken by users from the banned communities were promptly neutralized.[7, 8] In our latest CSCW paper, we set out to explore what happens in the second scenario: when users change platforms.

What we did

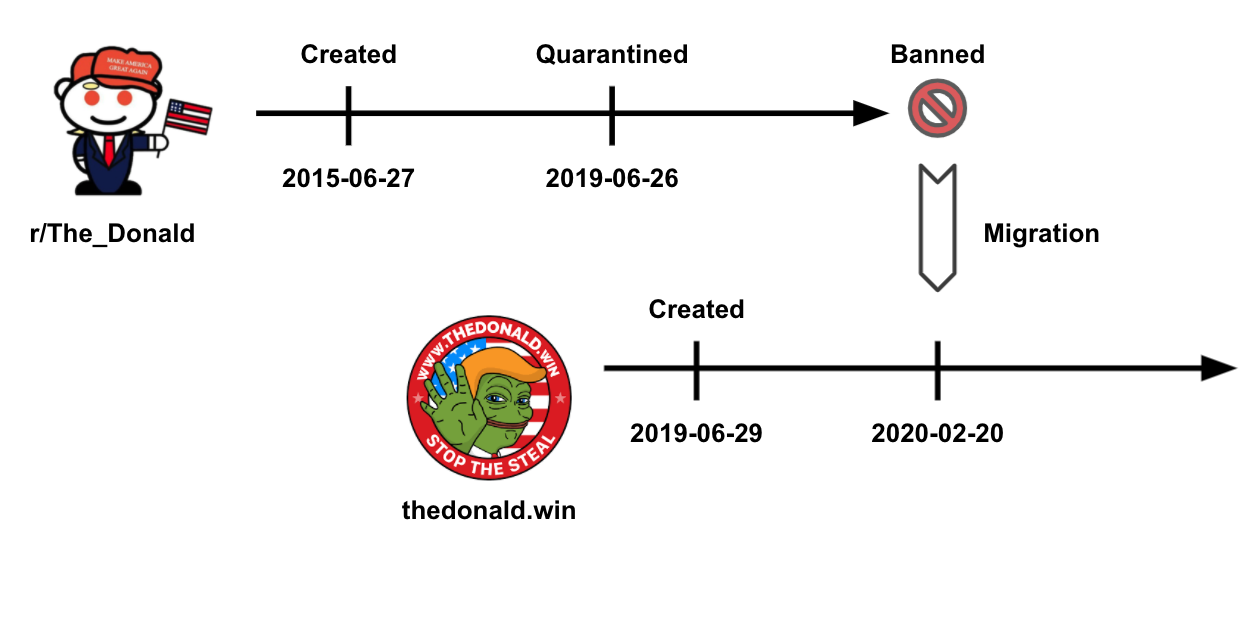

We focused on the case where users migrate (mostly) to a single alternative platform. This happened with two prominent Reddit communities: r/The_Donald, which migrated to the standalone website thedonald.win (now patriots.win), and r/Incels, which migrated to the incels.co forum. Importantly, these migrations were “official,” in the sense that they were backed by the subreddits’ community leaders. In the case of r/The_Donald, for instance, the community was locked for weeks, and the moderators managed to pin a post containing a link to the alternative platform.

Timeline of the deplatforming of r/The_Donald.

We analyzed how these two communities changed along two dimensions. First, we looked at their activity: how the number of posts, users, and newcomers changed with the migration. Second, we looked at text-derived signals, such as toxicity scores (thanks, Perspective API!), that would indicate user radicalization in these communities. Using these signals, we showed that deplatforming a community significantly decreased community-level activity in both r/The_Donald and r/Incels. For r/The_Donald, we also found that it significantly increased radicalization-related signals.
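To make the activity dimension more concrete, here is a minimal Python sketch of the kind of daily counts involved. It is an illustration, not the code used in the paper: it assumes a hypothetical input format (one `(author, day)` pair per post) and an illustrative definition of a newcomer (a user whose first post in the data falls on that day); the paper’s exact operationalization may differ.

```python
from collections import Counter
from datetime import date

# Hypothetical input format: one (author, day) pair per post.
Post = tuple[str, date]


def daily_activity(posts: list[Post]) -> dict[date, dict[str, int]]:
    """Count posts, active users, and newcomers for each day.

    Illustrative assumption: a newcomer on day d is a user whose first
    post in `posts` falls on day d.
    """
    posts_per_day = Counter(day for _, day in posts)
    users_per_day: dict[date, set[str]] = {}
    first_seen: dict[str, date] = {}
    for author, day in posts:
        users_per_day.setdefault(day, set()).add(author)
        # Keep the earliest day on which this author was seen posting.
        if author not in first_seen or day < first_seen[author]:
            first_seen[author] = day
    # Counter returns 0 for days on which nobody posted for the first time.
    newcomers_per_day = Counter(first_seen.values())
    return {
        day: {
            "posts": posts_per_day[day],
            "users": len(users_per_day[day]),
            "newcomers": newcomers_per_day[day],
        }
        for day in sorted(posts_per_day)
    }


# Toy example with made-up data.
posts = [
    ("a", date(2020, 6, 1)),
    ("b", date(2020, 6, 1)),
    ("a", date(2020, 6, 2)),
    ("c", date(2020, 6, 2)),
    ("a", date(2020, 6, 2)),
]
for day, counts in daily_activity(posts).items():
    print(day, counts)
```

On the toy data, June 1 has 2 posts, 2 users, and 2 newcomers, while June 2 has 3 posts, 2 users, and 1 newcomer (only "c" is new). Comparing such series before and after the migration date is one way to see whether a community retains its activity.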

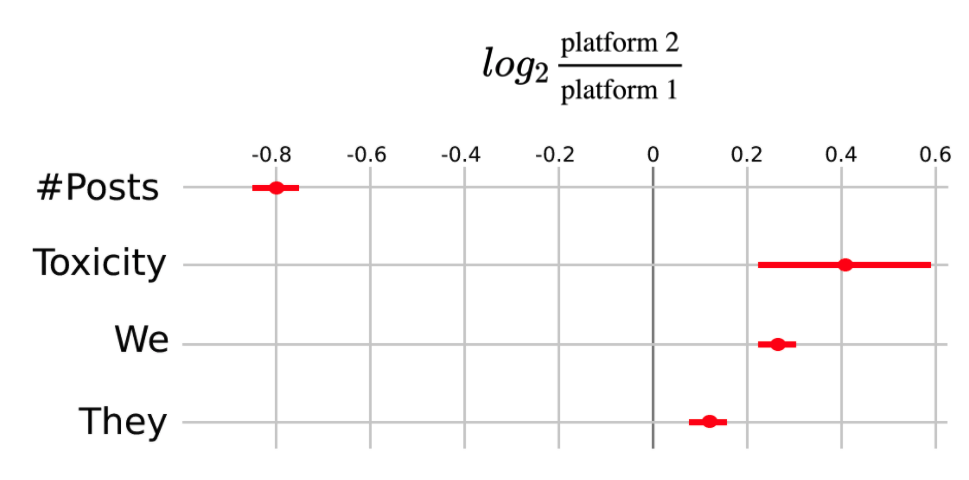

User-level effects of the migration in r/The_Donald.

In the figure above, we show part of our results for r/The_Donald. For each signal (y-axis), we show the average per-user log2 ratio (x-axis). To calculate this value, we (i) match users before and after the migration, and (ii) calculate each user’s average value in the 90 days before and the 90 days after the migration. Then, we divide the post-migration average (e.g., the number of posts per day a user made on thedonald.win) by the pre-migration average (e.g., the number of posts per day the same user made on r/The_Donald). Lastly, we take the log2 of this ratio to make the value easier to interpret: increases and decreases by the same factor map to values of the same magnitude with opposite signs. For instance, if the log2 ratio of the number of posts per user is 1, the user posted twice as much on the new platform as on Reddit. If it is -2, the user posted a quarter as much on the new platform.
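The log2 ratio computation described above can be sketched in a few lines of Python. This is an illustration, not the code used in the paper: it assumes users have already been matched across the two platforms (so the same key identifies the same person in both dictionaries) and that each value is that user’s 90-day average of one signal, such as posts per day. Skipping users with a zero average on either side is a choice made only for this sketch, since the log ratio is undefined for them.

```python
import math
from statistics import mean


def user_log2_ratios(
    before: dict[str, float], after: dict[str, float]
) -> dict[str, float]:
    """Return log2(after / before) for each user present in both dicts.

    `before` and `after` map a (hypothetical) matched user id to that user's
    average value of one signal in the 90 days before / after the migration.
    """
    matched = before.keys() & after.keys()
    return {
        user: math.log2(after[user] / before[user])
        for user in matched
        # Sketch assumption: skip users with a zero average on either side,
        # because the log ratio is undefined for them.
        if before[user] > 0 and after[user] > 0
    }


def average_log2_ratio(before: dict[str, float], after: dict[str, float]) -> float:
    """Average the per-user log2 ratios (the quantity on the figure's x-axis)."""
    return mean(user_log2_ratios(before, after).values())


# Toy example with made-up posts-per-day averages.
before = {"alice": 4.0, "bob": 1.0, "carol": 2.0}  # on r/The_Donald
after = {"alice": 8.0, "bob": 0.25, "dave": 3.0}   # on thedonald.win
print(sorted(user_log2_ratios(before, after).items()))  # [('alice', 1.0), ('bob', -2.0)]
print(average_log2_ratio(before, after))                # -0.5
```

Only "alice" and "bob" appear on both sides, so "carol" and "dave" are ignored; their ratios reproduce the two examples in the text (1 means twice as much, -2 means a quarter as much).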

In that context, the figure shows that the banning of r/The_Donald led to a reduction in posts of around 40% (roughly $1 - 2^{-0.8}$) and to an increase in toxicity (as measured by Perspective API) of around 31%. In the paper, we also run community-level analyses, which yield largely the same results.
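Converting an average log2 ratio r back into a percentage change is a one-line computation: r corresponds to a factor of 2^r, i.e., a change of 100 × (2^r - 1) percent. The sketch below (the function name is ours, chosen for illustration) applies it to the posts example. Because r is an average of logs, 2^r is the geometric mean of the per-user ratios rather than their arithmetic mean, so the resulting percentage is best read as a typical per-user change.

```python
def percent_change(log2_ratio: float) -> float:
    """Percentage change implied by a log2 ratio r: 100 * (2**r - 1)."""
    return 100 * (2 ** log2_ratio - 1)


print(round(percent_change(-0.8)))  # -43, i.e. a reduction of roughly 40%
print(percent_change(1))            # 100, i.e. twice as much
print(percent_change(-2))           # -75.0, i.e. a quarter as much
```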

So what?

Our analysis suggests that community-level moderation measures decrease the capacity of toxic communities to retain their activity levels and attract new members, but that this may come at the expense of making these communities more toxic and ideologically radical.

As platforms moderate, they should consider their impact not only on their own websites and services but also on the Web as a whole. Toxic communities respect no platform boundaries; thus, platforms should consider being more proactive in identifying and sanctioning toxic communities before they reach the critical mass needed to migrate to a standalone website.

References

- [1] https://nymag.com/intelligencer/2013/11/alex-jones-americas-top-conspiracy-theorist.html

- [2] https://www.vox.com/2018/8/6/17655658/alex-jones-facebook-youtube-conspiracy-theories

- [3] https://www.merriam-webster.com/words-at-play/the-good-the-bad-the-semantically-imprecise-08102018

- [4] https://www.forbes.com/sites/jackbrewster/2021/01/12/the-extremists-conspiracy-theorists-and-conservative-stars-banned-from-social-media-following-the-capitol-takeover/

- [5] https://edition.cnn.com/2021/09/01/tech/reddit-covid-misinformation-ban/index.html

- [6] Almeida, V., Filgueiras, F., and Gaetani, F. Digital governance and the tragedy of the commons. IEEE Internet Computing (2020).

- [7] Chandrasekharan, E., Pavalanathan, U., Srinivasan, A., Glynn, A., Eisenstein, J., and Gilbert, E. You can’t stay here: the efficacy of Reddit’s 2015 ban examined through hate speech. In Proceedings of the ACM on Human-Computer Interaction (CSCW) (2017).

- [8] Saleem, H. M., and Ruths, D. The aftermath of disbanding an online hateful community. arXiv:1804.07354 (2018).

- [9] Mathew, B., Illendula, A., Saha, P., Sarkar, S., Goyal, P., and Mukherjee, A. Hate begets hate: a temporal study of hate speech. In Proceedings of the ACM on Human-Computer Interaction (CSCW) (2020).

- [10] Previously shown to be related to radicalization; see: Pennebaker, J. W., and Chung, C. K. Computerized text analysis of Al-Qaeda transcripts. A content analysis reader (2007).